In the TED2025 conversation between Sam Altman and Chris Anderson, several key themes emerged about the future of artificial intelligence. Here’s a closer look at each one, with specific examples and insights from the discussion.

- 1. AI Agents and Autonomy

- 2. Superintelligence and Safety

- 3. Economic Impact

- 4. Regulation and Governance

- 5. Ethics, Alignment, and Collective Decision-Making

- Final Thoughts

1. AI Agents and Autonomy

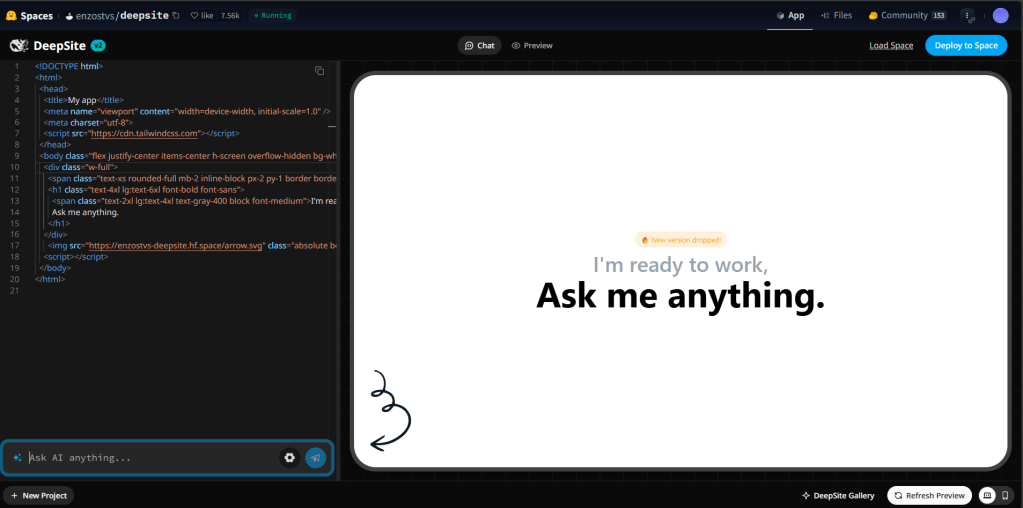

One of the biggest shifts under way is from static tools like today’s ChatGPT to dynamic, autonomous AI agents. These aren’t just chatbots; they’re systems that can take actions on your behalf, such as browsing the web, filling out forms, making bookings, or even negotiating deals.

Sam Altman talks about an early OpenAI agent called Operator. Think of it as an AI-powered executive assistant that not only understands what you want but can go out and act on it for you, safely and effectively.

But here’s the challenge: if an AI agent can book a flight, what’s stopping it from emptying your bank account? That’s why OpenAI is developing what Altman calls intention mapping—basically, before the AI does anything, it lays out its planned actions, and you (or a supervising system) can intercept or approve them.

🧠 Example: Tell the agent, “Plan a weekend in San Francisco.” It’ll handle everything—hotel, restaurants, events—and you get a summary before anything is confirmed. No micromanaging needed.
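To make the plan-then-approve idea concrete, here is a minimal Python sketch. It assumes a simple model in which the agent describes every step up front and a reviewer callback (a person or a supervising system) decides whether each step runs. `PlannedAction`, `execute_with_approval`, and the `high_risk` flag are hypothetical names for illustration only; this is not Operator’s API or OpenAI’s actual implementation of intention mapping.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical illustration only: not OpenAI's Operator API.


@dataclass
class PlannedAction:
    """One step the agent intends to take, described before it runs."""
    description: str
    run: Callable[[], str]   # the side effect, executed only if approved
    high_risk: bool = False  # e.g. payments or irreversible bookings


def execute_with_approval(
    plan: list[PlannedAction],
    approve: Callable[[PlannedAction], bool],
) -> list[str]:
    """Lay out the whole plan first, then run only the approved steps."""
    # 1. Show every intended action before anything happens.
    for i, action in enumerate(plan, start=1):
        flag = " [HIGH RISK]" if action.high_risk else ""
        print(f"{i}. {action.description}{flag}")

    # 2. Let the reviewer intercept or approve each step individually.
    results = []
    for action in plan:
        if approve(action):
            results.append(action.run())
        else:
            results.append(f"skipped: {action.description}")
    return results


# Hypothetical weekend-planning run: the reviewer auto-approves
# low-risk steps and holds back anything that spends money.
plan = [
    PlannedAction("Search hotels near Union Square", lambda: "found 3 hotels"),
    PlannedAction("Reserve dinner for two on Saturday", lambda: "table held"),
    PlannedAction("Pay $450 hotel deposit", lambda: "charged $450", high_risk=True),
]
results = execute_with_approval(plan, approve=lambda a: not a.high_risk)
# results → ['found 3 hotels', 'table held', 'skipped: Pay $450 hotel deposit']
```

The key design choice is that side effects live inside `run` and are never called until the reviewer says yes, so the summary you see is exactly what would execute.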

2. Superintelligence and Safety

Altman is pretty direct about this: OpenAI believes superintelligence is coming, possibly within the next few years rather than decades. Superintelligence here means AI systems smarter than the best humans in almost every domain.

With that comes real risk, so OpenAI is investing in a mix of techniques: combining different AI modules (each an expert in one field), orchestrating them with a meta-system that decides which module to use when, and using feedback loops to improve over time.

He also calls for global regulation—an “IAEA for AI”—so that no single company or country runs unchecked toward superintelligence. This would involve audits, capability thresholds, and possibly even pause buttons at global scale.

⚠️ Why it matters: With superintelligence, mistakes aren’t just bugs—they’re existential risks. You don’t want a superintelligent system misunderstanding a goal or finding loopholes in our instructions.

3. Economic Impact

AI is already starting to reshape the economy, and that’s only accelerating.

Altman believes some jobs will absolutely be automated—but he doesn’t see it as pure loss. Like past tech shifts, AI will destroy some roles and create others. The key difference now is the speed and scale of that change.

For most people, AI will become a kind of co-pilot, taking on routine tasks so humans can focus on creativity, judgment, and interpersonal work—things machines still struggle with.

💼 Example: A junior analyst might use AI to crunch numbers and generate slides. But their real value becomes asking better questions, interpreting results, and communicating insights.

He also stresses that AI-driven productivity gains should benefit everyone, not just the top tech companies.

4. Regulation and Governance

Altman isn’t anti-regulation—quite the opposite. He argues that without smart, global coordination, AI could become a geopolitical mess.

The idea is to regulate capabilities, not companies, so that rules don’t lock startups out while the giants race ahead. Instead, set clear thresholds: if a model can autonomously write code, navigate the internet, or otherwise act on its own, it should go through safety evaluations.

He also supports transparency and public input, rather than leaving AI development behind closed doors.

🏛 Example: Think of how we regulate airplanes or pharmaceuticals—not banning them, but requiring high safety standards, audits, and emergency procedures.

5. Ethics, Alignment, and Collective Decision-Making

One of the most exciting—and tricky—parts of AI is aligning it with human values. That’s not just “don’t do harm”—it’s also about helping humans make better collective decisions.

Altman talks about building systems that can simulate the impact of major policies or decisions using AI-driven agents representing different demographics and viewpoints.

He even suggests AI could help with a kind of algorithmic democracy, where it helps surface the best arguments and trade-offs—not just what’s popular or loudest.

🧪 Example: Before passing a new education bill, simulate its impact on families, teachers, budgets—then refine the plan based on what the simulation tells you.

Final Thoughts

What’s clear from this conversation is that Altman and OpenAI are preparing for a very different future—one where AI is not just a tool, but a partner, a decision-maker, and potentially even a superintelligent entity.

The opportunity is massive. But so are the risks. And the way we handle the next few years—especially with regard to governance, safety, and alignment—will shape how AI serves humanity in the long term.
