1. Origins: 1950s–1970s – Laying the Foundations

The journey of AI began with visionary thinkers like Alan Turing, whose 1950 paper “Computing Machinery and Intelligence” opened with the question, “Can machines think?” In the same paper he proposed the “imitation game,” now known as the Turing Test: a criterion for judging whether a machine can exhibit intelligent behavior indistinguishable from that of a human.

In 1956, the Dartmouth Conference marked the formal birth of AI as a field of study. It was organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, whose 1955 proposal for the workshop introduced the term “Artificial Intelligence” and set the agenda for future research.

🔍 Key Applications:

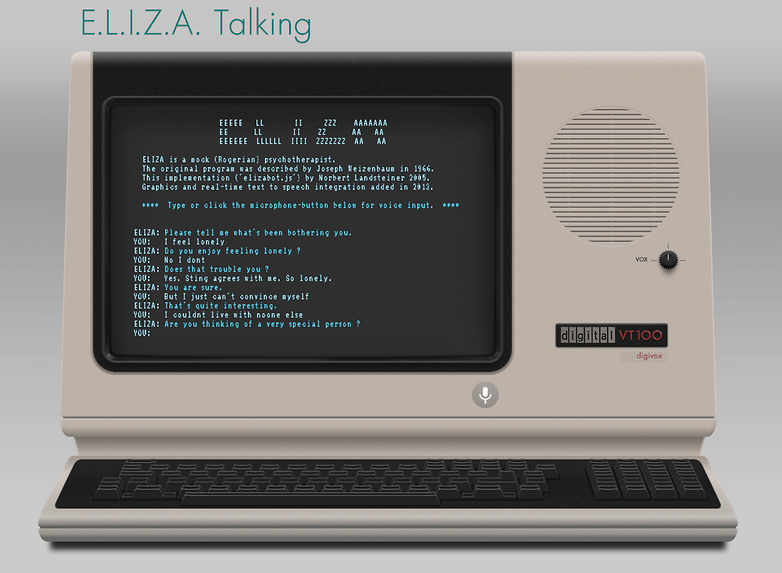

- ELIZA (1966): Developed by Joseph Weizenbaum at MIT, ELIZA was an early natural language processing program that simulated conversation through pattern matching and substitution: it looked for keywords in the user’s input and slotted fragments of that input into scripted replies. Its best-known script, DOCTOR, mimicked a Rogerian psychotherapist, demonstrating the potential of machines to process human language.

- Shakey the Robot (1969): Created by the Stanford Research Institute, Shakey was the first mobile robot capable of reasoning about its actions. It combined computer vision, natural language processing, and the ability to navigate its environment, laying the groundwork for future robotics.
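To make ELIZA’s “pattern matching and substitution” concrete, here is a minimal Python sketch of an ELIZA-style responder. The rules, the pronoun-reflection table, and the `respond` function are hypothetical simplifications written for illustration; Weizenbaum’s original was written in MAD-SLIP and used a richer scheme of ranked keywords and decomposition rules.

```python
import re

# Hypothetical, much-simplified rules in the spirit of ELIZA's DOCTOR script:
# each regex is paired with a reply template that reuses the captured text.
RULES = [
    (re.compile(r"\bI need (.*)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bI am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

# Pronoun swaps so captured fragments read naturally when echoed back.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}


def reflect(fragment: str) -> str:
    """Swap first- and second-person words in a captured fragment."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(sentence: str) -> str:
    """Return the reply for the first matching rule, or a generic prompt."""
    text = sentence.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Substitute the reflected captures into the reply template.
            return template.format(*(reflect(group) for group in match.groups()))
    return "Please go on."


if __name__ == "__main__":
    print(respond("I am worried about my exams."))  # How long have you been worried about your exams?
    print(respond("The weather is nice."))          # Please go on.
```

Rules are tried in order and the first match wins; when nothing matches, the program falls back to a neutral prompt, which is roughly how ELIZA kept a conversation going without any understanding of what was said.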

2. The AI Winter and Recovery: 1970s–1990s

Despite early enthusiasm, AI research ran into serious obstacles, leading to periods known as “AI winters,” the first in the mid-1970s and the second in the late 1980s. These were times of reduced funding and interest, brought on by unmet expectations and the limits of available computing power.

However, the commercial success of expert systems in the 1980s reignited interest. These systems encoded the knowledge of human experts as if-then rules and applied them to emulate expert decision-making within a narrow domain.

🔍 Key Applications:

- XCON (1980s): Developed by John McDermott at Carnegie Mellon University for Digital Equipment Corporation (DEC), XCON (originally called R1) was an expert system that configured VAX computer systems from customer orders. It significantly reduced the time and errors associated with manual configuration, showcasing the practical benefits of AI in industry.
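The if-then reasoning behind expert systems can be sketched as a small forward-chaining rule engine: rules fire whenever all of their conditions are known facts, and the engine repeats until no new facts appear. XCON itself was a production system written in the OPS family of rule languages; the `Rule` class, the `forward_chain` function, and the configuration facts below are hypothetical and only illustrate the technique, not XCON’s actual rule base.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """An if-then rule: when every condition is a known fact, assert the conclusion."""
    name: str
    conditions: frozenset[str]
    conclusion: str


# Hypothetical configuration rules, loosely inspired by the kind of knowledge
# XCON encoded; they are illustrative, not real XCON rules.
RULES = [
    Rule("needs-cabinet", frozenset({"order:cpu"}), "add:cpu-cabinet"),
    Rule("needs-controller", frozenset({"order:disk"}), "add:disk-controller"),
    Rule("needs-power", frozenset({"add:cpu-cabinet", "add:disk-controller"}), "add:extra-power-supply"),
]


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Fire rules repeatedly until no rule adds a new fact (a fixed point)."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            # A rule fires only if all its conditions hold and it adds something new.
            if rule.conditions <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                changed = True
    return known


if __name__ == "__main__":
    result = forward_chain({"order:cpu", "order:disk"}, RULES)
    print(sorted(fact for fact in result if fact.startswith("add:")))
```

For an order containing both a CPU and a disk, the first two rules fire and their conclusions in turn satisfy the third, so the engine derives all three `add:` components; an order with only a CPU never triggers the power-supply rule.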

3. The Rise of Machine Learning: 1990s–2010

Advancements in computational power and the availability of large datasets in the 1990s shifted the field toward machine learning, a subset of AI focused on enabling machines to learn patterns from data rather than follow hand-written rules.

🔍 Key Applications:

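- Deep Blue (1997): IBM’s chess-playing computer defeated world champion Garry Kasparov in a six-game match, marking a significant milestone in AI. Deep Blue relied mainly on specialized hardware, massive search, and a hand-tuned evaluation function rather than machine learning; its ability to evaluate millions of positions per second demonstrated the power of raw computation in complex problem-solving.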

- Siri (2011): At the close of this period, Apple introduced Siri on the iPhone 4S as a virtual assistant capable of understanding and responding to natural language queries. Siri’s integration into smartphones brought AI into the daily lives of millions, highlighting the technology’s accessibility and utility.

4. Modern AI Era: 2010–2020

The 2010s witnessed significant breakthroughs in deep learning, a subset of machine learning based on neural networks with many layers. During this period, AI systems matched or exceeded human performance on specific tasks, such as image recognition benchmarks and the game of Go.

🔍 Key Applications:

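- AlphaGo (2016): Developed by DeepMind, AlphaGo defeated Lee Sedol, one of the world’s top Go players, 4–1 in March 2016; in October 2015 it had already become the first program to beat a professional Go player (Fan Hui) on a full board without a handicap. It combined deep neural networks, trained with supervised and reinforcement learning, with Monte Carlo tree search, showcasing AI’s ability to handle intricate decision-making.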

- GPT-2 (2019): OpenAI’s Generative Pre-trained Transformer 2 (GPT-2) demonstrated the ability to generate coherent and contextually relevant text, pushing the boundaries of natural language processing and generation.

5. The Present and Future of AI: 2020s and Beyond

As we look to the future, AI is poised to become even more integrated into various aspects of society, from autonomous vehicles to personalized medicine. Emerging trends include:

- Multimodal AI: Systems capable of processing and integrating multiple forms of data (e.g., text, images, audio) to provide more comprehensive insights.

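- Open-weight AI models: Initiatives like Meta’s LLaMA, which makes its model weights available under license terms rather than as fully open-source software, are democratizing AI development, allowing a broader range of researchers and developers to contribute to and benefit from AI advancements.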

- AI Governance and Ethics: As AI becomes more pervasive, establishing ethical guidelines and regulatory frameworks is crucial to ensure responsible development and deployment.

🔍 Key Applications:

- ChatGPT (2022): Released by OpenAI in November 2022, ChatGPT brought conversational AI to a mass audience, changing how many people interact with computers by generating human-like responses on a wide range of topics.

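- Tesla Autopilot: Tesla’s Autopilot is a driver-assistance system rather than a fully autonomous one. It applies AI to real-time camera and sensor data to assist with navigation, lane keeping, lane changes, and adaptive cruise control, while the driver remains responsible for supervising the vehicle.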

Artificial intelligence has evolved from theoretical concepts to practical applications that permeate various facets of modern life. As technology continues to advance, the potential for AI to contribute positively to society is immense, provided that its development is guided by ethical considerations and robust governance.
